We recently had to revisit one of the reports in our system (a financial solution) because it was taking too long: anywhere between 20 and 40 minutes, depending on the size of the Mutual/Hedge Fund company. Basically, the report contained information about the positions of each account in a fund: things like Units Held, Average Cost, Market Value and Series. Unfortunately, these numbers cannot be obtained through simple SQL aggregate functions like SUM or AVG; crunching them is a harder exercise that involves going through the transaction history of each account.

Just for perspective, the system keeps track of investors' holdings for several Canadian Mutual Fund and Hedge Fund companies (and I can't reveal much more because, well, it's the Financial Industry after all and I don't wanna die young). These companies are usually divided internally into multiple funds, with different trading styles, goals, etc.

To report the positions of an entire Mutual/Hedge Fund company, our code looped through the company's funds, crunching the numbers for the positions in each one. This happened synchronously: a foreach loop processed the funds one at a time. We determined this was the area of the code with the most room for improvement. In reality, there was no need for one fund to wait for the others to finish. Each fund could get its own thread of execution, running in parallel to take advantage of the 16-core server, which would run one thread per core and queue the rest.

The problem is that the report itself can only be generated after all the threads have finished; otherwise it would be incomplete. Essentially, once all the threads are created and started, the main thread needs to hang in there, waiting for every one of them to finish. Only then, with all the final numbers in hand, can it create the Excel report. Needless to say, we never know in advance how long each thread is going to take, and differences in total assets between funds can be enormous, which results in very different execution times.

There are several ways to do this in .NET, but I believe the easiest is the Task.WaitAll method of the System.Threading.Tasks.Task class. The code is remarkably simple and clear, which also makes it easier to maintain.
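To make the waiting part concrete, here is a minimal, self-contained sketch (not the post's original code) using `Task.Run` and `Task.WaitAll`. The workloads are made-up `Thread.Sleep` calls of very different lengths, standing in for small and large funds; the point is that the calling thread blocks until the slowest task is done and only then reads the results. It uses top-level statements, so it assumes C# 9 or later.

```csharp
using System;
using System.Diagnostics;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical per-fund workloads (in ms) with very different run times,
// standing in for funds of very different sizes.
int[] workMs = { 300, 50, 1200, 100 };

var stopwatch = Stopwatch.StartNew();

// Start one task per unit of work; Task.Run queues each one on the thread pool,
// which spreads them across the available cores.
Task<int>[] tasks = new Task<int>[workMs.Length];
for (int i = 0; i < workMs.Length; i++)
{
    int ms = workMs[i];   // copy the loop value into a local before capturing it in the lambda
    tasks[i] = Task.Run(() =>
    {
        Thread.Sleep(ms); // simulate crunching the numbers for one fund
        return ms;
    });
}

// Block the calling thread until every task has finished, however long the slowest one takes.
Task.WaitAll(tasks);
stopwatch.Stop();

// Only now are all the results available, so the report can be built safely.
int[] results = Array.ConvertAll(tasks, t => t.Result);
Console.WriteLine($"All {results.Length} tasks done in ~{stopwatch.ElapsedMilliseconds} ms");
```

Total time ends up close to the longest single workload rather than the sum of all of them, which is exactly the effect described above.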

Here's the PositionsReportExportForFund object. It loads the positions for a specific fund. We need to create one instance of this object per fund and invoke its LoadPositions method, which loads all the information and stores it in the object's HistoricalPositions field.

Then we have the Company object, whose code creates the different threads of execution and spits out the report. This is the meat of it.
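The original listings for these two classes aren't reproduced here, so the following is only a hypothetical sketch of their shape. It assumes LoadPositions fills the HistoricalPositions field, and it models the report as a list of strings. The constructors, the `FundId` property, the `BuildPositionsReport` method and the string-based report are illustrative inventions; the real code computes units, average cost and so on from the transaction history and writes an Excel file.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Usage: build a report for a company with three hypothetical fund IDs.
var company = new Company(new[] { 101, 102, 103 });
List<string> reportLines = company.BuildPositionsReport();
foreach (string line in reportLines) Console.WriteLine(line);

// Hypothetical shape of the per-fund worker. The real LoadPositions walks each
// account's transaction history; this stub only records a placeholder line.
class PositionsReportExportForFund
{
    public int FundId { get; }
    public List<string> HistoricalPositions = new List<string>();

    public PositionsReportExportForFund(int fundId) => FundId = fundId;

    public void LoadPositions()
    {
        // Placeholder for the expensive per-fund calculation (units held, average cost, ...).
        HistoricalPositions.Add($"Fund {FundId}: positions loaded");
    }
}

// Hypothetical Company: starts one task per fund, waits for all of them, then builds the report.
class Company
{
    private readonly int[] _fundIds;

    public Company(int[] fundIds) => _fundIds = fundIds;

    public List<string> BuildPositionsReport()
    {
        var exporters = new PositionsReportExportForFund[_fundIds.Length];
        var tasks = new Task[_fundIds.Length];

        for (int i = 0; i < _fundIds.Length; i++)
        {
            // A fresh local per iteration, so each lambda captures its own exporter.
            var exporter = new PositionsReportExportForFund(_fundIds[i]);
            exporters[i] = exporter;
            tasks[i] = Task.Run(() => exporter.LoadPositions());
        }

        // The report is only complete once every fund has been processed.
        Task.WaitAll(tasks);

        // Merge the per-fund results in fund order (stand-in for writing the Excel file).
        var report = new List<string>();
        foreach (var e in exporters) report.AddRange(e.HistoricalPositions);
        return report;
    }
}
```

Each exporter is touched only by its own task until WaitAll returns, so the plain List fields need no locking; the main thread reads them only after every task has completed.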

So, that's it! Or so you would think.

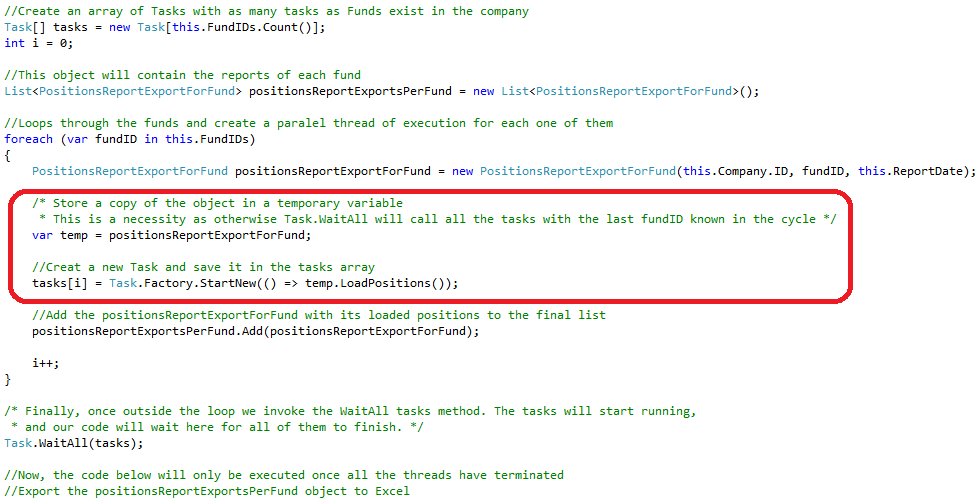

This code should be enough to make the whole thing work, but when I tested it I ran into a very strange issue. Even though I was looping through the different Fund ID values, all the tasks in the array ran for the same Fund ID: the last one in the collection. I couldn't figure out why, but fortunately other people had already run into the problem and found a solution: the intermediary object needs to be saved into a temporary variable first. It looks useless and, well... not pretty, but so be it.
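Here is a small, deterministic reproduction of the problem. It is a sketch that assumes the Fund ID lived in a variable declared outside the loop (the post doesn't show the exact loop). The tasks are created with `new Task<int>(...)` and only started after the loop has finished, so the buggy version reliably shows every task reading the last Fund ID, while copying the value into a variable declared inside the loop body gives each task its own.

```csharp
using System;
using System.Threading.Tasks;

int[] fundIds = { 101, 102, 103, 104 };

// Buggy pattern (hypothetical reconstruction): one variable shared by every iteration.
// Each lambda captures the variable itself, not the value it held when the lambda was created.
int sharedFundId = 0;
var buggy = new Task<int>[fundIds.Length];
for (int i = 0; i < fundIds.Length; i++)
{
    sharedFundId = fundIds[i];
    buggy[i] = new Task<int>(() => sharedFundId); // created, but not started yet
}

// Start the tasks only after the loop, so by now sharedFundId holds the last Fund ID.
foreach (var task in buggy) task.Start();
Task.WaitAll(buggy);

// Fix: copy the value into a variable declared inside the loop body.
// Each iteration gets a fresh variable, so each lambda sees its own Fund ID.
var fixedTasks = new Task<int>[fundIds.Length];
for (int i = 0; i < fundIds.Length; i++)
{
    int fundId = fundIds[i];
    fixedTasks[i] = new Task<int>(() => fundId);
}
foreach (var task in fixedTasks) task.Start();
Task.WaitAll(fixedTasks);

Console.WriteLine(string.Join(", ", Array.ConvertAll(buggy, t => t.Result)));      // 104, 104, 104, 104
Console.WriteLine(string.Join(", ", Array.ConvertAll(fixedTasks, t => t.Result))); // 101, 102, 103, 104
```

In real code the tasks usually start while the loop is still running, which makes the buggy version timing-dependent rather than always wrong; deferring the Start calls just makes the effect easy to see.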

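So, there you have it: a simple implementation that lets the main thread wait for all the other parallel tasks to finish before proceeding. As for the temporary variable, it isn't actually a framework bug. A lambda captures the variable itself rather than its value at the moment the lambda is created, so if every iteration reuses the same variable, every task sees whatever it holds when the task finally runs, typically the last Fund ID. C# 5 changed foreach so that each iteration gets its own loop variable, but a variable declared outside the loop (or a for loop counter) is still shared by every lambda.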

As a result, the position reports now take between 15 and 40 seconds, since the code makes much better use of the web server's cores, and users are much happier.

Hope it helps.
